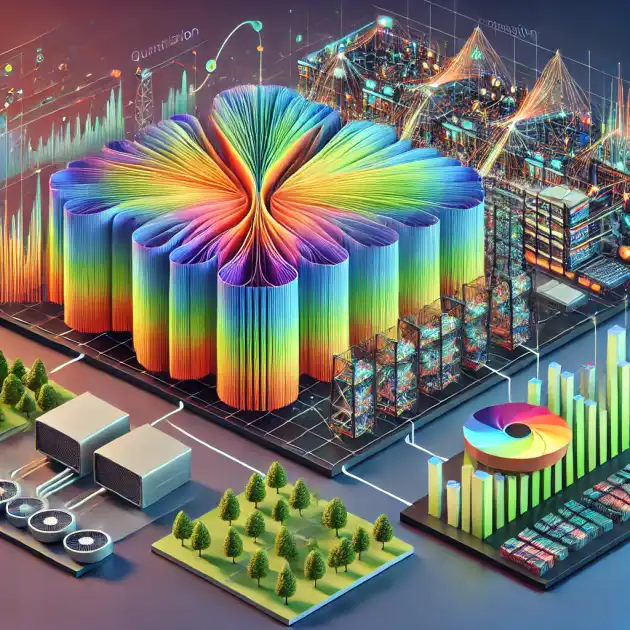

Minimizing GPU RAM and Scaling Model Training Horizontally with Quantization and Distributed Training

Training multibillion-parameter models poses significant challenges, chiefly because of GPU memory limits. A single NVIDIA A100 or H100 GPU, with its 80 GB of GPU RAM, often falls short when a model is held in 32-bit full precision, because training must store gradients, optimizer state, and activations in addition to the weights themselves. This blog post covers two techniques for working around that limit: quantization and distributed training.

Quantization: Reducing Precision to Conserve Memory

Quantization is a process that reduces the precision of model weights, thereby decreasing the memory required to load and train the model. This technique projects higher-precision floating-point numbers into a lower-precision target set, significantly cutting down the memory footprint.

How Quantization Works

Quantization involves the following steps:

Scaling Factor Calculation: Determine a scaling factor based on the range of source (high-precision) and target (low-precision) numbers.

Projection: Map the high-precision numbers to the lower-precision set using the scaling factor.

Storage: Store the projected numbers in the reduced precision format.
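The three steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not how any particular library implements quantization: it assumes a symmetric "absmax" scheme with a single scaling factor for the whole tensor and an int8 target range of [-127, 127]. The function names `quantize_absmax_int8` and `dequantize` are hypothetical.

```python
from array import array


def quantize_absmax_int8(values):
    """Quantize a non-empty list of floats to int8 with one shared scale.

    Assumption: symmetric absmax scheme (one of several possible schemes).
    """
    # Step 1: scaling factor from the source range (largest magnitude)
    # and the target range (127 for symmetric int8).
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0

    # Step 2: projection. Divide by the scale, round to the nearest
    # integer, and clamp to the target range.
    projected = [max(-127, min(127, round(v / scale))) for v in values]

    # Step 3: storage. array("b") holds one signed byte per value.
    return array("b", projected), scale


def dequantize(stored, scale):
    """Map the stored integers back to approximate float values."""
    return [q * scale for q in stored]


weights = [0.5, -1.27, 0.0, 1.0]
stored, scale = quantize_absmax_int8(weights)
print(list(stored), scale)
print(dequantize(stored, scale))
```

The round trip is lossy: each restored value can differ from the original by up to half the scaling factor, which is the precision given up in exchange for the smaller footprint.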

For instance, converting model parameters from 32-bit precision (fp32) to 16-bit precision (fp16 or bfloat16), or even to 8-bit (int8) or 4-bit precision, can drastically reduce memory usage. A 1-billion-parameter model needs about 4 GB just to hold its weights in fp32 (4 bytes per parameter). Quantizing to 16-bit precision halves that to approximately 2 GB, and 8-bit precision lowers it to about 1 GB, a 75% reduction. These figures cover the weights only; gradients, optimizer state, and activations add to the total during training.
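The arithmetic behind those figures is simple enough to check directly. The sketch below counts weight storage only and uses 1 GB = 10^9 bytes; the helper name `weight_memory_gb` and the `int4` label for 4-bit precision are assumptions made for illustration.

```python
# Bits used to store one parameter in each format.
BITS_PER_PARAM = {"fp32": 32, "fp16": 16, "bfloat16": 16, "int8": 8, "int4": 4}


def weight_memory_gb(num_params, dtype):
    """Memory needed to hold the weights alone, in GB (10^9 bytes)."""
    return num_params * BITS_PER_PARAM[dtype] / 8 / 1e9


one_billion = 1_000_000_000
for dtype in BITS_PER_PARAM:
    print(f"{dtype:>8}: {weight_memory_gb(one_billion, dtype):.1f} GB")
```

Scaling `num_params` up to tens of billions makes it clear why fp32 weights alone can approach or exceed an 80 GB GPU before any training state is counted.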

Choosing the Right Data Type

The choice of data type for quantization depends on the specific needs of your application:

fp32: Offers the highest accuracy but is memory-intensive and may exceed GPU RAM limits for large models.

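fp16 and bfloat16: These halve the memory footprint compared to fp32. bfloat16 is often preferred over fp16 for training because it keeps the same 8-bit exponent, and therefore the same dynamic range, as fp32, which reduces the risk of overflow. The trade-off is that bfloat16 has fewer mantissa bits than fp16, so individual values are stored less precisely.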

fp8: An emerging data type that further reduces memory and compute requirements, showing promise as hardware and framework support increases.

int8: An 8-bit integer format commonly used to optimize inference; it cuts weight memory to a quarter of the fp32 footprint.
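The range-versus-precision trade-off between fp16 and bfloat16 can be observed without a GPU. The sketch below is an emulation under stated assumptions: fp16 uses Python's built-in half-precision `struct` format, and bfloat16 is approximated by keeping the top 16 bits of an fp32 value with round-to-nearest-even. The helper names are hypothetical, and special values such as NaN are not handled.

```python
import struct


def to_fp16(x):
    """Round-trip a float through IEEE half precision; inf on overflow."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        # struct refuses values beyond the fp16 range (max is 65504).
        return float("inf")


def to_bfloat16(x):
    """Emulate bfloat16 by keeping the top 16 bits of the fp32 encoding.

    Assumes x is finite and fits in fp32.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest even on the 16 bits that are dropped.
    bits += 0x7FFF + ((bits >> 16) & 1)
    bits = (bits >> 16) << 16
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))[0]


# A large value overflows fp16 but survives (coarsely) in bfloat16.
print(to_fp16(70000.0), to_bfloat16(70000.0))
# A value close to 1 is stored more precisely in fp16 than in bfloat16.
print(to_fp16(1.001), to_bfloat16(1.001))
```

The first line illustrates the overflow risk with fp16; the second shows what bfloat16 gives up in return.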

Distributed Training: Scaling Horizontally Across GPUs

When a single GPU's memory is insufficient, distributing the training process across multiple GPUs is necessary. Distributed training allows for scaling the model horizontally, leveraging the combined memory and computational power of multiple GPUs.

Approaches to Distributed Training

Data Parallelism: Each GPU holds a complete copy of the model but processes different mini-batches of data. Gradients from each GPU are averaged and synchronized at each training step.

Pros: Simple to implement, suitable for models that fit within a single GPU’s memory.

Cons: Every GPU must hold the full model along with its gradients and optimizer state, so model size is capped by the memory of a single GPU.
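To make the gradient averaging in data parallelism concrete, here is a framework-free simulation. It assumes a toy one-parameter model (y = w * x) with a mean-squared-error loss, and it models each "GPU" as a slice of the batch processed in a loop. The function names are hypothetical; in a real setup the averaging would be done by a collective operation such as all-reduce.

```python
def mse_gradient(w, batch):
    """Gradient of mean((w * x - y)^2) with respect to w over one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_step(w, batch, num_workers, lr):
    """One training step with the batch sharded across simulated workers."""
    if len(batch) % num_workers != 0:
        raise ValueError("batch size must be divisible by num_workers")
    shard_size = len(batch) // num_workers
    shards = [batch[i * shard_size:(i + 1) * shard_size]
              for i in range(num_workers)]

    # Every worker holds the same w and computes a gradient on its own shard.
    local_grads = [mse_gradient(w, shard) for shard in shards]

    # Stand-in for all-reduce: average the gradients across workers.
    avg_grad = sum(local_grads) / num_workers

    # Every worker applies the same update, so the replicas stay in sync.
    return w - lr * avg_grad


batch = [(float(x), 3.0 * x) for x in range(1, 9)]
print(data_parallel_step(0.0, batch, num_workers=4, lr=0.01))
print(0.0 - 0.01 * mse_gradient(0.0, batch))  # single-worker reference
```

With equal-sized shards, the averaged gradient matches the gradient computed on the whole batch, which is why data parallelism speeds up training without changing the update itself.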

Model Parallelism: The model is partitioned across multiple GPUs. Each GPU holds and computes only its portion of the model, and intermediate results (activations and their gradients) are passed between GPUs.

Pros: Effective for extremely large models that cannot fit into a single GPU’s memory.

Cons: More complex to implement, and communication overhead between GPUs can be significant.
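One way to partition a model is to split a single layer's weight matrix across devices, often called tensor parallelism. The post does not say which partitioning scheme it has in mind, so treat the following as one illustrative option. It uses plain Python lists as stand-ins for GPU tensors, and the function names are hypothetical.

```python
def matmul(x, w):
    """Multiply x (n x k) by w (k x m), both given as lists of rows."""
    return [[sum(row[k] * w[k][j] for k in range(len(w)))
             for j in range(len(w[0]))]
            for row in x]


def split_columns(w, parts):
    """Split w column-wise into equal shards, one per simulated device."""
    step = len(w[0]) // parts
    return [[row[p * step:(p + 1) * step] for row in w] for p in range(parts)]


def column_parallel_matmul(x, w, num_devices):
    """Each device multiplies x by its own column shard of w."""
    shards = split_columns(w, num_devices)
    partial_outputs = [matmul(x, shard) for shard in shards]
    # Stand-in for all-gather: concatenate the partial outputs per row.
    return [sum((part[i] for part in partial_outputs), [])
            for i in range(len(x))]


x = [[1, 2], [3, 4]]
w = [[1, 0, 2, 1], [0, 1, 1, 3]]
print(matmul(x, w))
print(column_parallel_matmul(x, w, num_devices=2))
```

Each simulated device stores only half of `w`, yet the gathered result equals the full product. The gather step is the communication cost referred to in the cons above.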

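Pipeline Parallelism: A form of model parallelism in which the model is divided into sequential stages, with each stage assigned to a different GPU. Each mini-batch is split into smaller micro-batches that flow through the stages in order, so different GPUs can work on different micro-batches at the same time.

Pros: Keeps more GPUs busy than naive model parallelism, where only one GPU is active at a time; suitable for very deep models.

Cons: Introduces pipeline bubbles (idle time while stages wait for work as the pipeline fills and drains) and can be complex to manage.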
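The size of a pipeline bubble can be estimated with a small simulation. The sketch below assumes a simplified schedule: forward passes only, every stage taking one time step per micro-batch, and no communication delay. Real schedules also interleave backward passes, so the numbers are indicative rather than exact. The function names are hypothetical.

```python
def forward_schedule(num_stages, num_microbatches):
    """Build a stage-by-time grid; each cell is a micro-batch id or None."""
    total_steps = num_stages + num_microbatches - 1
    grid = [[None] * total_steps for _ in range(num_stages)]
    for stage in range(num_stages):
        for mb in range(num_microbatches):
            # Stage s can start micro-batch mb only after stage s-1 is done.
            grid[stage][stage + mb] = mb
    return grid


def bubble_fraction(grid):
    """Share of stage-time slots in which a stage sits idle."""
    slots = sum(len(row) for row in grid)
    idle = sum(cell is None for row in grid for cell in row)
    return idle / slots


for microbatches in (1, 4, 12):
    grid = forward_schedule(num_stages=4, num_microbatches=microbatches)
    print(microbatches, round(bubble_fraction(grid), 3))
```

Under these assumptions the idle share works out to (stages - 1) / (micro-batches + stages - 1), so splitting each batch into more micro-batches shrinks the bubble.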

Implementing Distributed Training

To implement distributed training effectively:

Framework Support: Utilize frameworks like TensorFlow, PyTorch, or MXNet, which offer built-in support for distributed training.

Efficient Communication: Ensure efficient communication between GPUs using technologies like NCCL (NVIDIA Collective Communications Library).

Load Balancing: Balance the workload across GPUs to prevent bottlenecks.

Checkpointing: Regularly save model checkpoints so that a hardware failure or preempted job costs only the progress made since the last save, not the whole training run.
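As a rough illustration of the checkpointing point, the sketch below saves and restores training state using only the standard library. It is deliberately framework-free: real training code would normally use the framework's own serialization (for example `torch.save` in PyTorch), and in a multi-GPU job typically only one process writes the file. The function names and the JSON layout are assumptions made for this example.

```python
import json
import os
import tempfile


def save_checkpoint(path, step, weights):
    """Write the checkpoint atomically so a crash never leaves a partial file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step, "weights": weights}, f)
        f.flush()
        os.fsync(f.fileno())
    # os.replace swaps the new file in as a single atomic operation.
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return (step, weights), or (0, None) if no checkpoint exists yet."""
    if not os.path.exists(path):
        return 0, None
    with open(path) as f:
        state = json.load(f)
    return state["step"], state["weights"]
```

A training loop would call `load_checkpoint` once at startup to decide where to resume, then call `save_checkpoint` every fixed number of steps.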

Conclusion

Combining quantization and distributed training offers a robust solution for training large-scale models within the constraints of available GPU memory. Quantization significantly reduces memory requirements, while distributed training leverages multiple GPUs to handle models that exceed the capacity of a single GPU. By effectively applying these techniques, you can optimize GPU usage, reduce training costs, and achieve scalable performance for your machine learning models.