Understanding Transformer Architecture in Large Language Models

In the ever-evolving field of artificial intelligence, language models have emerged as a cornerstone of modern technological advancements. Large Language Models (LLMs) like GPT-3 have not only captured the public's imagination but have also fundamentally changed how we interact with machines. At the heart of these models lies an innovative structure known as the transformer architecture, which has revolutionized the way machines understand and generate human language.

The Basics of Transformer Architecture

The transformer model, introduced in the paper "Attention Is All You Need" by Vaswani et al. in 2017, moves away from traditional recurrent neural network (RNN) approaches. Unlike RNNs, which process tokens one at a time and must carry context forward through a hidden state, transformers use a mechanism called self-attention to process all words in a sentence in parallel. This lets the model relate each word directly to every other word in the sentence, however far apart they are.

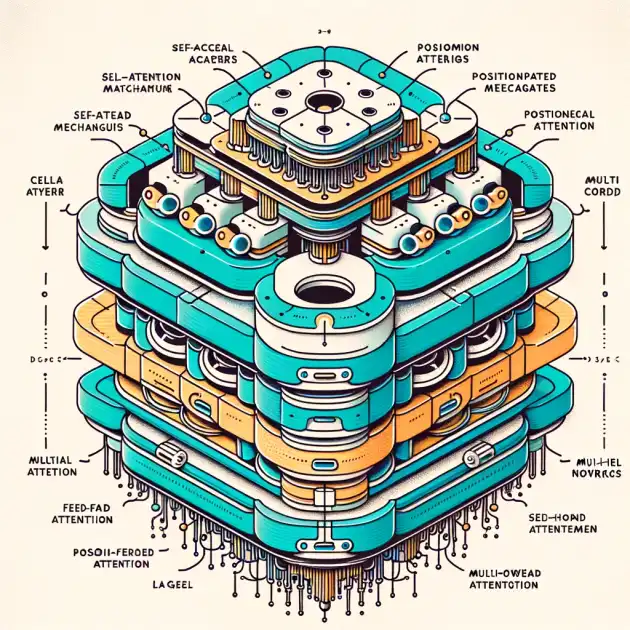

Key Components of the Transformer

Self-Attention: This component lets the transformer weigh the relevance of every other word in a sentence when building the representation of a given word, regardless of how far apart the words are. For instance, in the sentence "The bank heist was foiled by the police," self-attention allows the model to associate "heist" strongly with "police," even though several words separate them, and to use "heist" to read "bank" as a financial institution rather than a riverbank.
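
As a rough illustration of the idea, the following minimal sketch computes scaled dot-product self-attention in plain Python. The two-dimensional token vectors are made up for the example, and the sketch uses them directly as queries, keys, and values; a real transformer would first pass them through learned projection matrices, which are omitted here.

```python
import math


def softmax(xs):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical toy embeddings, chosen so "bank" and "heist" are similar.
tokens = ["bank", "heist", "police"]
x = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]

outputs, weights = self_attention(x, x, x)
for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

With these toy vectors, the row for "bank" places more weight on "heist" than on "police", because the first two vectors point in similar directions.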

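Positional Encoding: Self-attention on its own is insensitive to word order, so transformers add positional encodings to the input embeddings to carry information about where each word sits in the sequence. The original paper uses fixed sine and cosine functions of different frequencies for this, while some later models learn the position vectors instead. Without such a signal, the model would have no direct way to distinguish "dog bites man" from "man bites dog."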
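
One way to make this concrete is the sinusoidal scheme from Vaswani et al. (2017), where even dimensions use a sine and odd dimensions use a cosine of pos / 10000^(2i / d_model). The sketch below follows that formula in plain Python; the sequence length, model dimension, and constant embedding values are arbitrary choices for illustration.

```python
import math


def positional_encoding(seq_len, d_model):
    """Sinusoidal position vectors: one row per position, d_model columns."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each sin/cos pair (dimensions 2k and 2k+1) shares a frequency.
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


pe = positional_encoding(seq_len=4, d_model=6)

# Hypothetical embeddings: the same token repeated at four positions.
embeddings = [[0.5] * 6 for _ in range(4)]

# The encoding is added element-wise to the embeddings.
encoded = [
    [e + p for e, p in zip(emb_row, pe_row)]
    for emb_row, pe_row in zip(embeddings, pe)
]
```

Although the four input embeddings are identical, the rows of `encoded` differ, so later layers can tell the positions apart.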

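Multi-Head Attention: Rather than computing attention once, the transformer runs several attention operations ("heads") in parallel, each with its own learned projections of the input, and concatenates their results. Different heads can attend to different parts of the sentence and capture different kinds of relationships, which gives a richer representation of the context.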
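
The split-attend-concatenate pattern can be sketched as follows. This is a simplified illustration, not a full implementation: each head here simply works on its own slice of the input vector, whereas a real transformer gives every head learned query, key, and value projections and applies a learned output projection after concatenation. The input values and the function name `multi_head_attention` are made up for the example.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention for lists of vectors."""
    d_k = len(k[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        w = softmax(scores)
        out.append([sum(wj * vj[c] for wj, vj in zip(w, v)) for c in range(len(v[0]))])
    return out


def multi_head_attention(x, num_heads):
    """Run attention independently on each slice of the model dimension."""
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads

    head_outputs = []
    for h in range(num_heads):
        lo, hi = h * d_head, (h + 1) * d_head
        # Simplification: slice instead of applying learned projections.
        x_h = [row[lo:hi] for row in x]
        head_outputs.append(attention(x_h, x_h, x_h))

    # Concatenate the per-head outputs back to d_model per token.
    merged = []
    for t in range(len(x)):
        row = []
        for head in head_outputs:
            row.extend(head[t])
        merged.append(row)
    return merged


# Three tokens with a model dimension of 4, split across two heads.
x = [[1.0, 0.0, 0.2, 0.8], [0.9, 0.1, 0.7, 0.3], [0.0, 1.0, 0.5, 0.5]]
out = multi_head_attention(x, num_heads=2)
```

Each head computes its own attention weights, so the first two dimensions and the last two dimensions of every output row can reflect different patterns of attention over the same sentence.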

Feed-Forward Neural Networks: Each layer of a transformer also contains a feed-forward neural network that is applied to every position separately and identically, that is, with the same weights at each position. This network further transforms the output of the attention sub-layer.
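
A minimal sketch of such a position-wise network is shown below, using the two-layer form with a ReLU in between that the original paper describes. The weight values and the tiny dimensions (2 to 3 to 2) are hypothetical and chosen only so the arithmetic is easy to follow.

```python
def linear(x, W, b):
    """Compute x @ W + b for a single vector x (W is d_in x d_out)."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def relu(v):
    return [max(0.0, a) for a in v]


def feed_forward(x, W1, b1, W2, b2):
    """Two linear layers with a ReLU in between, applied to one position."""
    return linear(relu(linear(x, W1, b1)), W2, b2)


# Hypothetical weights: expand 2 -> 3, then project back 3 -> 2.
W1 = [[1.0, -1.0, 0.5], [0.0, 1.0, -0.5]]
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b2 = [0.0, 0.0]

# The same weights are used at every position in the sequence.
sequence = [[1.0, 2.0], [3.0, -1.0], [1.0, 2.0]]
outputs = [feed_forward(token, W1, b1, W2, b2) for token in sequence]
```

Because each position is processed on its own with shared weights, the first and third tokens, which have identical inputs, produce identical outputs.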

Training Transformers

Transformers are typically trained in two phases: pre-training and fine-tuning. During pre-training, the model learns general language patterns from a vast corpus of text, usually by predicting missing or upcoming words in unlabeled data. In the fine-tuning phase, the model is adjusted on a smaller, task-specific dataset to perform tasks such as question answering or sentiment analysis. This approach is a form of transfer learning: a single pre-trained model can be adapted to a wide range of tasks.
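
The two-phase idea can be illustrated with a deliberately tiny example. In the sketch below, a fixed matrix stands in for weights learned during pre-training, and "fine-tuning" trains only a small classification head on top of it. This is one common variant under simplifying assumptions; fine-tuning in practice often updates the pre-trained weights as well. All names, weights, and data points are hypothetical.

```python
import math

# Stand-in for weights learned during pre-training (hypothetical values).
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5]]


def encode(x):
    """Frozen 'pre-trained' encoder: fixed linear map followed by tanh."""
    return [
        math.tanh(sum(xi * w for xi, w in zip(x, column)))
        for column in zip(*PRETRAINED_W)
    ]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(data, epochs=200, lr=0.5):
    """Train a logistic-regression head on top of the frozen encoder."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            h = encode(x)  # encoder weights are never updated
            p = sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)
            err = p - y  # gradient of the log loss w.r.t. the logit
            w = [wi - lr * err * hi for wi, hi in zip(w, h)]
            b -= lr * err
    return w, b


def predict(x, w, b):
    h = encode(x)
    return 1 if sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b) >= 0.5 else 0


# Small labeled dataset for the downstream task.
data = [([1.0, 0.0], 1), ([0.9, 0.2], 1), ([0.0, 1.0], 0), ([0.1, 0.8], 0)]
w, b = fine_tune_head(data)
predictions = [predict(x, w, b) for x, _ in data]
```

The same frozen encoder could be reused with a different head and dataset for another task, which is the practical appeal of transfer learning.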

Applications of Transformer Models

The versatility of transformer models is evident in their range of applications. These span language understanding, where Google's BERT has been used to improve search engine results, and text generation, where OpenAI's GPT-3 serves as the backbone for content creation. Transformers are also crucial in machine translation, summarization, and even in the development of empathetic chatbots.

Challenges and Future Directions

Despite their success, transformers are not without challenges. Their requirement for substantial computational resources makes them less accessible to the broader research community and raises environmental concerns. Additionally, they can perpetuate biases present in their training data, leading to fairness and ethical issues.

Ongoing research aims to tackle these problems by developing more efficient transformer models and methods to mitigate biases. The future of transformers could see them becoming even more integral to an AI-driven world, influencing fields beyond language processing.

Conclusion

The transformer architecture has undeniably reshaped the landscape of artificial intelligence by enabling more sophisticated and versatile language models. As we continue to refine this technology, its potential to expand and enhance human-machine interaction is boundless.

Explore the capabilities of transformer models by experimenting with platforms like Hugging Face, which provide access to pre-trained models and the tools to train your own. Dive into the world of transformers and discover the future of AI!

Further Reading and References

Vaswani, A., et al. (2017). Attention Is All You Need.

Devlin, J., et al. (2019). BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.

Brown, T., et al. (2020). Language Models are Few-Shot Learners.