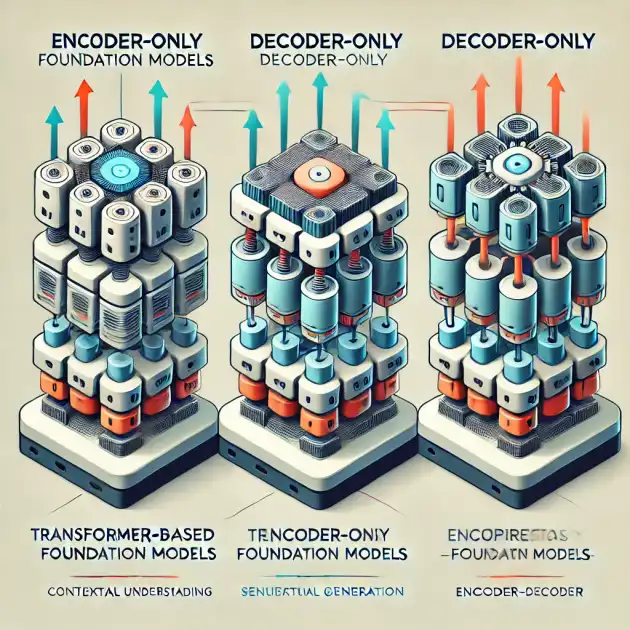

Types of Transformer-Based Foundation Models

Transformer-based foundation models have transformed natural language processing (NLP). They fall into three main architectural types: encoder-only, decoder-only, and encoder-decoder models. Each type is pretrained with a different objective and suits a different kind of task: understanding text, generating open-ended text, or transforming one sequence into another. Let’s look at each variant in turn, along with its characteristics and typical applications.

Encoder-Only Models (Autoencoders)

Training Objective: Masked Language Modeling (MLM)

Encoder-only models, also called autoencoding models, are pretrained with masked language modeling: a fraction of the input tokens is hidden (masked) at random, and the model is trained to predict the original tokens at those positions. Because the rest of the sentence stays visible on both sides of each mask, the model learns to represent a token using both its preceding and its following tokens. Recovering a corrupted input in this way is often called a denoising objective.
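To make the masking step concrete, here is a minimal Python sketch of BERT-style masking. It follows the recipe described for BERT (select about 15% of positions; of those, replace 80% with `[MASK]`, 10% with a random token, and leave 10% unchanged), but it assumes simple whitespace tokens instead of BERT's WordPiece vocabulary, and the function name `mask_tokens` is hypothetical.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (inputs, labels) for one masked-language-modeling example.

    Assumption: whitespace tokens stand in for WordPiece subwords.
    labels[i] holds the original token at positions selected for prediction
    and None elsewhere (those positions contribute no loss).
    """
    rng = random.Random(seed)
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected: the model is not asked to predict this position
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK               # 80%: replace with the mask token
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels

tokens = "the cat sat on the mat".split()
inputs, labels = mask_tokens(tokens, vocab=tokens, mask_prob=0.5, seed=1)
print(inputs)
print(labels)
```

Keeping some selected tokens unchanged or randomized means the model cannot assume that only `[MASK]` positions matter, which matters because `[MASK]` never appears in real downstream inputs.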

Characteristics

Bidirectional Representations: Every token can attend to every other token in the input, so each token's representation reflects its full left and right context within the sentence.

Embedding Utilization: The contextual embeddings these models produce capture meaning well, which makes them effective features for tasks that depend on an understanding of text semantics.
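An encoder returns one vector per token. To get a single embedding for a whole sentence, one common choice is to average the token vectors while skipping padding positions (mean pooling); using the vector of BERT's special `[CLS]` token is another. This sketch assumes the per-token vectors have already been computed by an encoder; the helper name is hypothetical and the numbers are made up for illustration.

```python
def mean_pool(token_vectors, attention_mask):
    """Average the token vectors whose attention_mask entry is 1 (padding is skipped)."""
    dim = len(token_vectors[0])
    total = [0.0] * dim
    count = 0
    for vec, keep in zip(token_vectors, attention_mask):
        if keep:
            for j in range(dim):
                total[j] += vec[j]
            count += 1
    if count == 0:
        raise ValueError("attention_mask selects no tokens")
    return [x / count for x in total]

# Two real tokens followed by one padding position (illustrative values).
print(mean_pool([[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]], [1, 1, 0]))  # → [2.0, 3.0]
```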

Applications

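Text Classification: These models are particularly useful for classification tasks, such as sentiment or topic labeling, where understanding the context and semantics of the text is crucial. A common approach is to add a small classification layer on top of the encoder and fine-tune the whole model on labeled examples.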

Semantic Similarity Search: Encoder-only models can power document search that goes beyond keyword matching. Queries and documents are embedded as vectors and compared by similarity, so a document can match a query that shares its meaning but not its exact words.
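Semantic search then reduces to comparing vectors. This sketch ranks documents by cosine similarity to a query embedding. The three-dimensional vectors and document IDs are invented for illustration; in practice both queries and documents would be embedded by the same encoder, and large collections usually rely on an approximate nearest-neighbor index rather than this linear scan.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def search(query_vec, doc_vecs, top_k=3):
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical embeddings: the first dimension loosely encodes "refunds/returns".
docs = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "return-an-item": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]  # e.g. "how do I get my money back?"
print(search(query, docs, top_k=2))
```

Neither matching document needs to contain the words "money back"; what matters is that the encoder places them near the query in embedding space.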

Example: BERT

A well-known example of an encoder-only model is BERT (Bidirectional Encoder Representations from Transformers). BERT's ability to capture contextual information has made it a powerful tool for various NLP tasks, including sentiment analysis and named entity recognition.

Decoder-Only Models (Autoregressive Models)

Training Objective: Causal Language Modeling (CLM)

Decoder-only models, or autoregressive models, are pretrained using unidirectional causal language modeling. In this approach, the model predicts the next token in a sequence using only the preceding tokens, ensuring that each prediction is based solely on the information available up to that point.
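The causal objective turns one sequence into many next-token prediction problems. This sketch shows both views: explicit (context, next token) pairs, and the equivalent shifted inputs and targets that, combined with a causal attention mask, let every position be trained in a single forward pass. The token IDs are arbitrary integers and the function names are hypothetical.

```python
def causal_lm_pairs(token_ids):
    """Split a sequence into (context, next_token) training pairs.

    At step t the model sees token_ids[:t + 1] and must predict token_ids[t + 1].
    """
    return [(token_ids[: t + 1], token_ids[t + 1]) for t in range(len(token_ids) - 1)]

def shifted_inputs_and_targets(token_ids):
    """Same objective in batched form: targets are the inputs shifted left by one."""
    return token_ids[:-1], token_ids[1:]

print(causal_lm_pairs([5, 8, 2, 9]))             # → [([5], 8), ([5, 8], 2), ([5, 8, 2], 9)]
print(shifted_inputs_and_targets([5, 8, 2, 9]))  # → ([5, 8, 2], [8, 2, 9])
```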

Characteristics

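Unidirectional Representations: Each token can attend only to itself and the tokens before it, never to later ones. At inference time, the model therefore generates text one token at a time, feeding each new token back in as context for the next.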

Generative Capabilities: They are well-suited for generative tasks, producing coherent and contextually relevant text outputs.
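The difference between unidirectional and bidirectional models comes down to the attention mask. In this minimal sketch (hypothetical helper names, plain Python lists instead of tensors), `mask[i][j]` says whether position `i` may attend to position `j`. Disallowed positions receive zero weight in the softmax, which is equivalent to setting their scores to negative infinity.

```python
import math

def attention_mask(seq_len, causal):
    """mask[i][j] is True if position i may attend to position j.

    causal=True:  decoder-style, each token sees itself and earlier tokens only.
    causal=False: encoder-style (bidirectional), every token sees every token.
    """
    return [[(not causal) or j <= i for j in range(seq_len)] for i in range(seq_len)]

def masked_softmax(scores, allowed):
    """Softmax over one row of attention scores; disallowed positions get weight 0."""
    m = max(s for s, ok in zip(scores, allowed) if ok)  # subtract max for numerical stability
    exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]

# Print the causal mask for a 4-token sequence: a lower-triangular pattern.
for row in attention_mask(4, causal=True):
    print("".join("1" if allowed else "0" for allowed in row))
# 1000
# 1100
# 1110
# 1111
```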

Applications

Text Generation: Autoregressive models are the standard for tasks requiring text generation, such as chatbots and content creation.

Question-Answering: Given a question in the prompt, these models can generate fluent, contextually appropriate answers, although fluent output is no guarantee of factual accuracy.
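Both applications rest on the same loop: ask the model for next-token scores, pick a token, append it, and repeat until an end-of-sequence token or a length limit. This sketch uses greedy decoding (always take the highest-scoring token), which is only one strategy; sampling and beam search are common alternatives. The `toy_model` bigram table is a hypothetical stand-in for a trained decoder and, unlike a real one, looks only at the last token rather than the whole prefix.

```python
def generate(next_token_scores, prompt, max_new_tokens=10, eos="<eos>"):
    """Greedy autoregressive decoding.

    next_token_scores(context) returns a dict mapping candidate token -> score.
    Each chosen token is appended and becomes part of the context for the next step.
    """
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)
        best = max(scores, key=scores.get)
        if best == eos:
            break
        tokens.append(best)
    return tokens

# Hypothetical stand-in for a trained model: a bigram table keyed on the last token.
BIGRAMS = {
    "what": {"is": 0.9, "was": 0.1},
    "is": {"the": 0.7, "a": 0.3},
    "the": {"capital": 0.6, "answer": 0.4},
    "capital": {"<eos>": 1.0},
}

def toy_model(context):
    return BIGRAMS.get(context[-1], {"<eos>": 1.0})

print(generate(toy_model, ["what"]))  # → ['what', 'is', 'the', 'capital']
```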

Examples: GPT-3, Falcon, LLaMA

Prominent examples of decoder-only models include GPT-3, Falcon, and LLaMA. These models have gained widespread recognition for their ability to generate human-like text and perform a variety of NLP tasks with high proficiency.

Encoder-Decoder Models (Sequence-to-Sequence Models)

Training Objective: Span Corruption

Encoder-decoder models, often called sequence-to-sequence models, use both the encoder and the decoder of the original Transformer architecture. A common pretraining objective, used by T5, is span corruption: random spans of consecutive input tokens are each replaced by a single sentinel token, and the decoder is trained to output the missing spans, each preceded by the sentinel that marks where it belongs.
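Here is a minimal sketch of how span corruption builds one input/target pair. It uses the `<extra_id_N>` sentinel naming from the T5 vocabulary, but the spans are passed in explicitly for clarity; T5 itself samples them at random (corrupting about 15% of tokens, with a mean span length of 3). Whitespace tokenization and the function name `span_corrupt` are assumptions.

```python
def span_corrupt(tokens, spans):
    """T5-style span corruption for explicitly given spans.

    spans: sorted, non-overlapping (start, end) index pairs, end exclusive.
    Each span in the input is replaced by one sentinel; the target lists each
    sentinel followed by the tokens it replaced, then a closing sentinel.
    """
    inputs, targets = [], []
    cursor = 0
    for k, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{k}>"
        inputs.extend(tokens[cursor:start])  # keep the uncorrupted text before the span
        inputs.append(sentinel)              # the whole span collapses to one sentinel
        targets.append(sentinel)
        targets.extend(tokens[start:end])    # the decoder must reproduce the dropped tokens
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")
    return inputs, targets

tokens = "Thank you for inviting me to your party last week.".split()
inp, tgt = span_corrupt(tokens, [(2, 4), (8, 9)])
print(" ".join(inp))  # → Thank you <extra_id_0> me to your party <extra_id_1> week.
print(" ".join(tgt))  # → <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```

Because the target contains only the dropped spans, it is much shorter than the input, which keeps decoder-side training cheap.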

Characteristics

Dual Components: These models use an encoder to process the input sequence and a decoder to generate the output sequence; while generating, the decoder attends to the encoder's output through cross-attention. This split makes them highly versatile.

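Contextual Understanding: Because the encoder reads the whole input bidirectionally before the decoder starts generating, these models suit tasks where the output must be conditioned on a complete input, such as translation and summarization.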
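The link between the two halves is cross-attention: the decoder's queries come from the sequence being generated, while keys and values come from the encoder's output. This sketch implements single-head scaled dot-product attention in plain Python. It omits the learned query, key, and value projections a real layer applies, so the inputs are assumed to be already-projected vectors, and all values are illustrative.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(decoder_queries, encoder_keys, encoder_values):
    """Each decoder query returns a weighted average of the encoder's value vectors."""
    d = len(encoder_keys[0])
    outputs = []
    for q in decoder_queries:
        # Scaled dot-product score between this query and every encoder position.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in encoder_keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, encoder_values))
                        for j in range(len(encoder_values[0]))])
    return outputs

# Two decoder positions attending over three encoder positions (made-up vectors).
enc_keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
enc_values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
dec_queries = [[2.0, 0.0], [0.0, 2.0]]
print(cross_attention(dec_queries, enc_keys, enc_values))
```

Every decoder step can draw on every encoder position, which is how the full input stays available throughout generation.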

Applications

Translation: The original Transformer was an encoder-decoder model introduced for machine translation, and sequence-to-sequence models remain well suited to converting text from one language to another while preserving meaning and context.

Text Summarization: These models are also highly effective in summarizing long texts into concise and informative summaries.

Examples: T5, FLAN-T5

The T5 (Text-to-Text Transfer Transformer) model and its instruction-tuned variant, FLAN-T5, are well-known examples of encoder-decoder models. They have been applied successfully to a wide range of generative language tasks, including translation, summarization, and question answering.
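The "text-to-text" in T5's name means every task is phrased as input text mapped to output text, with a short task prefix telling the model what to do. The prefixes below follow the style of the original T5 checkpoints; the task keys and the helper `to_t5_input` are hypothetical, and other checkpoints such as FLAN-T5 may respond better to different, instruction-style wording, so treat the exact strings as an assumption.

```python
# Prefix wording in the style of the original T5 checkpoints (assumption:
# other checkpoints may expect different phrasing).
PREFIXES = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
}

def to_t5_input(task, text):
    """Cast a task as plain text-in/text-out by prepending its task prefix."""
    try:
        return PREFIXES[task] + text
    except KeyError:
        raise ValueError(f"unknown task: {task!r}") from None

print(to_t5_input("translate_en_de", "That is good."))
# → translate English to German: That is good.
```

The resulting string is tokenized and fed to the encoder exactly like any other input; the decoder's output text is the answer, whether that is a translation, a summary, or a short label.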

Summary

In conclusion, transformer-based foundation models are categorized into three distinct types, each with unique training objectives and applications:

Encoder-Only Models (Autoencoding): Best suited for tasks like text classification and semantic similarity search, with BERT being a prime example.

Decoder-Only Models (Autoregressive): Ideal for generative tasks such as text generation and question-answering, with examples including GPT-3, Falcon, and LLaMA.

Encoder-Decoder Models (Sequence-to-Sequence): Versatile models excelling in translation and summarization tasks, represented by models like T5 and FLAN-T5.

Understanding the strengths of each variant helps in choosing the right architecture for a given NLP task: an encoder for understanding and retrieval, a decoder for open-ended generation, or an encoder-decoder for transforming one sequence into another.